By Kade Crockford, director of the Technology for Liberty Project at the ACLU of Massachusetts

Many of us wear masks on Halloween for fun. But what about a world in which we have to wear a mask every single day to preserve our privacy from the government’s oppressive eye?

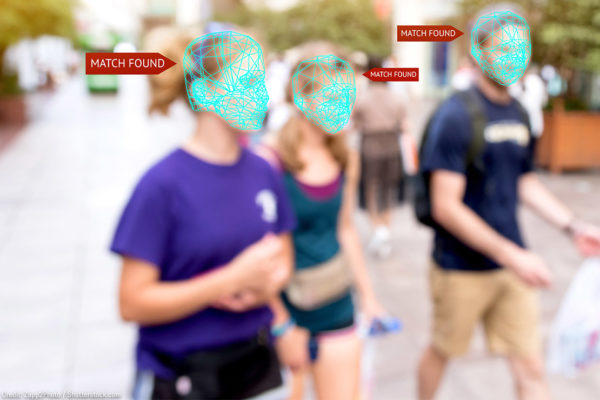

Face recognition surveillance technology has already made that frightening world a reality in Hong Kong, and it’s quickly becoming a scary possibility in the United States.

The FBI is currently collecting data about our faces, irises, walking patterns, and voices, permitting the government to pervasively identify, track, and monitor us. The agency can match or request a match of our faces against at least 640 million images of adults living in the U.S. And it is reportedly piloting Amazon’s flawed face recognition surveillance technology.

Face and other biometric surveillance technologies can enable undetectable, persistent, and suspicionless surveillance on an unprecedented scale. When placed in the hands of the FBI — an unaccountable, deregulated, secretive intelligence agency with an unresolved history of anti-Black racism — there is even more reason for alarm. And when that agency stonewalls our requests for information about how its agents are tracking and monitoring our faces, we should all be concerned.

That’s why today we’re asking a federal court to intervene and order the FBI and related agencies to turn over all records concerning their use of face recognition technology.

The FBI’s troubling political policing practices underscore the urgent need for transparency. Under the leadership of the agency’s patriarch — the disgraced J. Edgar Hoover — the FBI obsessively spied on left-wing, Indigenous rights, anti-war, and Black power activists across the country. Hoover infamously tried to blackmail Martin Luther King, Jr., encouraging the civil rights leader to kill himself to avoid the shame Hoover’s leaks to journalists would bring to him and his family. The FBI was also involved in the 1969 killing of Fred Hampton, a brilliant Chicago leader in the Black Panther Party who was assassinated by Chicago Police while he lay asleep in his bed next to his pregnant girlfriend.

While Hoover’s reign may be history, the FBI’s campaign against domestic dissent is not.

Since at least 2010, the FBI has monitored civil society groups, including racial justice movements, Occupy Wall Street, environmentalists, Palestinian solidarity activists, Abolish ICE protesters, and Cuba and Iran normalization proponents. In recent years, the FBI has wasted considerable resources spying on Black activists, whom the agency labeled “Black Identity Extremists” to justify even more surveillance of the Black Lives Matter movement and other fights for racial justice. The agency has also investigated climate justice activists, including 350.org and the Standing Rock water protectors, under the banner of protecting national security.

Because of the FBI’s secrecy, little is known about how the agency is supercharging its surveillance activities with face recognition technology. But what little is known from public reporting, the FBI’s own admissions to Congress, and independent tests of the technology gives ample reason to be concerned.

For instance, the FBI recently claimed to Congress that the agency does not need to demonstrate probable cause of criminal activity before using its face surveillance technology on us. FBI witnesses at a recent hearing also could not confirm whether the agency is meeting its constitutional obligations to inform criminal defendants when the agency has used the tech to identify them. The failure to inform people when face recognition technology is used against them in a criminal case, or the failure to turn over robust information about the technology’s error rates, source code, and algorithmic training data, robs defendants of their due process right to a fair trial.

This lack of transparency would be frightening enough if the technology worked. But it doesn’t: Numerous studies have shown face surveillance technology is prone to significant racial and gender bias. One peer-reviewed study from MIT found that face recognition technology can misclassify the faces of dark-skinned women up to 35 percent of the time. Another study found that so-called “emotion recognition” software identified Black men as more angry and contemptuous than their white peers. Other researchers have found that face surveillance algorithms discriminate against transgender and gender nonconforming people. When our freedoms and rights are on the line, one false match is too many.

Of course, even in the highly unlikely event that face recognition technology were to become 100 percent accurate, the technology’s threat to our privacy rights and civil liberties would remain extraordinary. This dystopian surveillance technology threatens to fundamentally alter our free society into one where we’re treated as suspects to be tracked and monitored by the government 24/7.

That’s why a number of cities and states are taking action to prevent the spread of ubiquitous face surveillance, and why law enforcement agencies, at minimum, must come clean about when, where, and how they are using face recognition technology. There can be no accountability if there is no transparency.


Date

Thursday, October 31, 2019 - 11:00am


Face surveillance systems are currently being used by the government in Massachusetts, despite the absence of privacy protections or even legislative authorization. Communities across the state are rallying and organizing to push back and to protect privacy, racial justice, and civil rights in the digital age. During the week of October 21, there are three important hearings across the state on this critical issue. Here’s how to get involved.

Monday, October 21, 2019, 6:00 PM | Springfield Face Surveillance Moratorium Vote

Springfield City Hall, 36 Court St, Springfield, MA 01103

- City Councilors in Springfield will vote on a measure to place a moratorium on government use of face surveillance technology. Please take action and spread the word. If you can, attend the meeting to show support for the moratorium.

Tuesday, October 22, 2019, 1:00 PM | Statewide Face Surveillance Moratorium Hearing

Massachusetts State House (Hearing Room A-1); 24 Beacon Street, Boston, MA 02133

Wednesday, October 23, 2019, 6:00 PM | Brookline Public Safety Meeting

Brookline Town Hall, 333 Washington St, Brookline, MA 02445

- Members of the Brookline Public Safety Committee will meet to discuss a proposed face surveillance ban for Town employees. Please raise your voice in support of this measure, and if you’re able, come out to the meeting to tell the Committee to support Warrant Article 25.

Springfield Face Surveillance Moratorium

Ongoing action

- City Councilors in Springfield will vote in November on a measure to place a moratorium on government use of face surveillance technology. Please take action and spread the word. (Note: This post originally asked folks to attend a City Council meeting Monday October 21 to support a vote on this measure. The meeting has been changed, and pushed back to November. We will update this website when we learn the exact date of the vote.)

This technology affects everyone in Massachusetts, and has the potential to radically reshape our future. Everyone should have a chance to weigh in.


Questions about the campaign or how to get involved? Email Emiliano Falcon at efalcon@aclum.org.

Date

Thursday, October 17, 2019 - 12:45pm


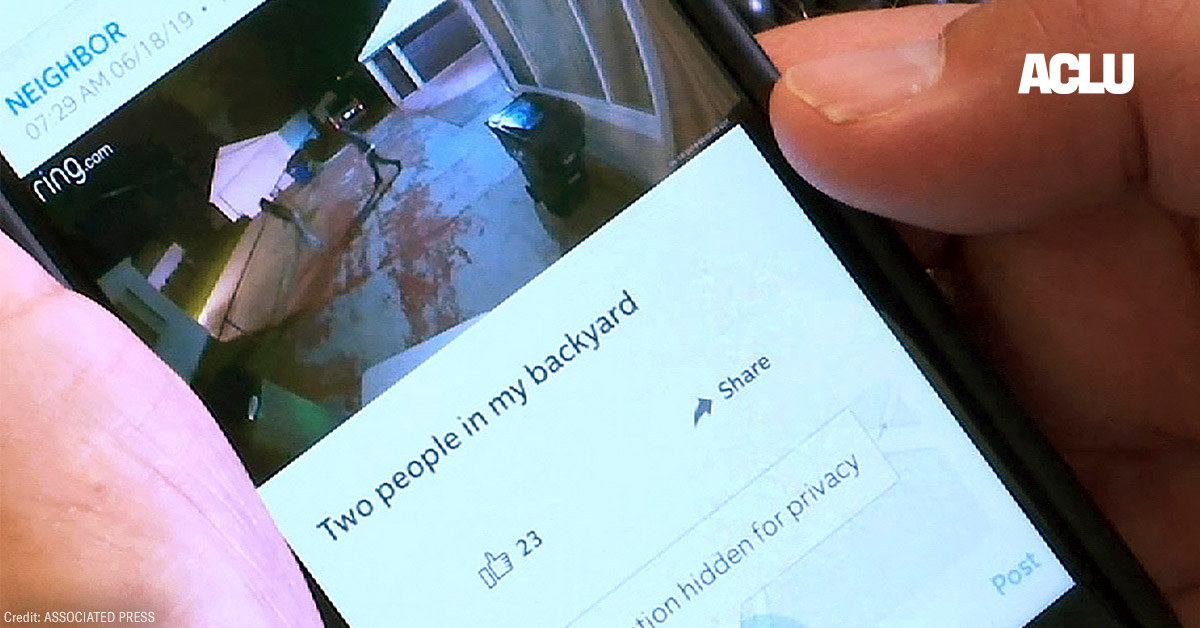

Around the country, local police are teaming up with Amazon and its subsidiary Ring to push people to install doorbell cameras outside their residences, leveraging this technology into a new kind of surveillance network. What should we think about this trend, both as consumers who might be contemplating a doorbell camera purchase and as human beings who don’t want to live in a Big Brother world?

Pushing fear and surveillance

First of all, it’s spooky and disconcerting to see one of our largest and most prominent companies teaming up with law enforcement to push surveillance technology on American communities. Recent reporting has revealed that Amazon helps police departments give free or discounted Ring cameras to residents, gives police a portal for requesting video and special access to its social app “Neighbors” (which helps neighbors share video clips), and coaches police departments on how to get users to agree to turn over the footage. In turn, some police departments have agreed to promote Ring cameras and the Neighbors app and, according to a document obtained by Gizmodo, even to give the company the right to approve or censor what police departments say about Ring. Amazon has contracts with over 400 police departments around the country. (Ring has released a map of those departments.)

Police officers are public servants — and not just any public servants but ones entrusted with enormous powers including, in certain situations, the power to ruin people by invading their privacy. Allowing officers to serve as a de facto sales force for a company selling surveillance devices is a betrayal of police departments’ duty to serve the public first. It is also a betrayal to allow that company to shape how departments communicate with the public about their technology programs.

Crime rates today are at historic lows. As the Brennan Center puts it, Americans are “safer than they have been at almost any time in the past 25 years.” Yet Ring has used fear-mongering advertising and information campaigns to drum up sales. As USA Today points out, “Amazon's promotional videos show people lurking around homes, and the company recently posted a job opening for a managing news editor to ‘deliver breaking crime news alerts to our neighbors.’” Amazon also asks police departments to give the company special real-time access to emergency dispatch (911) data so that it can pump out these crime alerts all the more efficiently, giving people an exaggerated sense of crime in their community. (This is also another example of a weirdly close interconnection between police and this giant company.) The result is that, as the Washington Post puts it, the “Neighbors feed operates like an endless stream of local suspicion.”

Let’s be clear: individuals have a First Amendment right to take photographs of things that are plainly visible in public places, including government facilities, infrastructure, and police officers in public (all of which rights we at the ACLU have defended in court after individuals were illegally arrested). They certainly have a right to photograph their own property, including the area in front of their front door.

All that said, more pervasive private cameras do erode our privacy, and it is dismaying to see two powerful institutions in American life (Amazon and law enforcement) so actively and concertedly pursuing their mutual interest in saturating American communities with surveillance cameras.

Centralizing video in the cloud

When private surveillance cameras first began to proliferate, we pointed out that distributed private cameras are better than centralized government surveillance networks for privacy and civil liberties. Distributed and isolated private cameras, we reasoned, deprive the government of suspicionless mass access to footage and the ability to do mass face recognition, wide-area tracking, and other analytics on that footage. They also place a middleman between the government and surveillance recordings, not only requiring police to go through a process to access particular footage, but also making it possible that, if the police try to request footage for abusive or unclear purposes, at least some owners may decide not to comply. (If the police have probable cause to believe that a camera contains evidence of a crime and the owner refuses to turn it over, they can go to a judge and get a warrant.)

However, the rise of cloud-connected cameras threatens to reverse the advantages of decentralized surveillance by re-centralizing the video collected across a community and removing the intermediary role that residents can play in checking surveillance abuses. Police officers have been quoted in press accounts as saying that when residents won’t agree to share video with the authorities, police can go to Ring and “just bypass them.” Amazon says that its policy is not to share video with police without user permission or a valid search warrant. But that’s Amazon’s policy according to Amazon, which leaves customers dependent on the company’s policies and promises.

Unlike with video stored at home, a Ring customer can never really be sure with whom cloud-stored video is being shared. We know that Ring gave workers access to every Ring camera in the world together with customer details. Other companies offering similar services, including Google, Microsoft, Apple, and Facebook, have also granted such access.

Ring’s Neighbors App has also raised issues of racial profiling, for example, when individuals use the social app to circulate video to their neighbors of people whom they deem suspicious because of their race. (“There’s somebody in our neighborhood who doesn’t belong.”) That can intensify the effects of racism and raises the question of how and whether police account for that racism in how they interact with the platform.

Another problem is that many people are not going to feel like they can say no to law enforcement. A police “request” of a resident to voluntarily turn over video footage will never be entirely uncolored by coercion and will often come across as a demand. Ring’s email asking customers to share video, for example, sounds much more like a directive than a request. It includes “A message from Detective Smith” declaring, “This is a time-sensitive matter, so please review and share your Ring videos as soon as possible.” Besides, some police departments have, in return for camera giveaways, reportedly required recipients to agree to turn over any camera footage on request. (Amazon says it does not support such agreements, but it’s unclear how much say the company has on the matter.)

Localities should reject agreements between Ring and their police departments. And, at a minimum, residents should scrutinize those arrangements closely and ask hard questions about what kind of community they want to create, with how much surveillance, and how a Ring partnership will play into that vision. As always, we also recommend that communities enact a law requiring their police departments to be transparent about, and receive permission for, any police use of surveillance technology (which would include agreements with Ring).

Do you really want a doorbell camera?

Should you buy a doorbell camera? This is an individual decision and no doubt it can be a handy device for some people. But if you’re thinking about installing one, you should go in with your eyes wide open — especially if you’re considering a camera that is connected to the Internet.

- Consider the risks. People often buy cameras because they are seeking empowerment and control over what happens in their homes. But be aware that such power can be turned against you. Internet-connected home cameras carry all the risks of other “Internet of things” devices: the risk of exposing elements of your home life to the government, to the company that makes your device, and to hackers. Researchers have already found a major vulnerability (now fixed) in the Ring doorbell. And keep in mind that while a doorbell cam will record others at your front door, it will also record the comings and goings and “pattern of life” of you and your family members.

- Be clear on what you want a doorbell camera for. Do you just want a tool to tell you who is or was at your door? Or do you want an app that has been conceived, designed, and marketed as a police-empowering “crime-stopping mission”? Ring, for one, is clear that it aims to be the latter: company founder Jamie Siminoff writes in grand terms of crime reduction being a “clear and enduring” mission for his company. Ring also describes its anti-crime mission using worrisomely militaristic rhetoric of “going to war.” You may want a camera for personal convenience or security, but do you also want to contribute to the creation of a broad surveillance infrastructure in your community that may be used in abusive ways?

- Consider whether you want cloud storage. As the saying goes, “There is no cloud; it’s just somebody else’s computer.” When you take video and store it on a device in your home, you have total control over it: how long it’s kept, whom it’s shared with, and when (unless the police come knocking with a warrant signed by a judge). But once you store that video in the cloud, you reduce your own physical and legal control over your camera and increase the power of companies and government. Ring’s cameras allow owners to view live video but do not let them review, share, or save videos unless they get a cloud subscription. If you choose to buy a camera, consider buying one with local storage of video data, which reviewers indicate is just as cheap and easy to use as cloud-based cameras.

- Consider the ethical issues. If you choose to install a camera, think about where you point your camera and what it records. Do you need to record public areas like sidewalks and streets? Some cameras allow you to virtually block out parts of a field of view so they are not recorded. Some people mount their cameras on a wedge to control the direction they point. Don’t record your neighbors’ property. And when and if capabilities such as face surveillance and video analytics become available, consider not deploying those. Amazon has already applied for a patent revealing that the company is, at a minimum, thinking about how to combine its Ring camera infrastructure with its Rekognition face surveillance infrastructure, and Ring has also researched such efforts.

Surveillance is always a matter of power. Generally speaking, it increases the power of those doing the surveillance at the cost of those who are subject to it. People buy cameras because they want to empower themselves by seeing who is at their door — a legitimate aim. But as with all power, consumers should use the power of surveillance responsibly — and beware that the tool doesn’t get turned on them and end up empowering somebody else.

Blog by Jay Stanley, Senior Policy Analyst, ACLU Speech, Privacy, and Technology Project.

Date

Tuesday, September 10, 2019 - 7:30am
